This post describes my final configuration for making the “Previous Versions” fileshare feature work for Windows 10 clients connecting to a FreeBSD Samba server backed by ZFS, as well as how I got there. To figure out why things didn’t work as expected or documented, and how to work around that, I read through Samba documentation, code, and various posts on the internet, of which this mailing list entry was the most helpful.

I’m using the Samba VFS module shadow_copy2 to achieve this, and have some observations about this module:

- It has an unintuitive shadow:delimiter parameter: its value has to appear at the beginning of the shadow:format parameter. It cannot be unset or set to blank, and it defaults to “_GMT”.

- The shadow:delimiter parameter cannot start with a literal “-”, and its value can’t be quoted or escaped. As an example, neither “\-” nor “-” works, whether or not they are quoted in the config.

- According to the documentation, shadow:snapprefix supports only “Basic Regular Expression (BRE)”. In my testing, the module accepted something that looks like a simplified regular expression, and I could not get full BRE to work. Grouping and interval characters such as ( ) { } (and possibly others) must be escaped with a backslash (\) to act as operators rather than as literal characters. That escaping matches POSIX BRE syntax, but it is the reverse of the extended syntax most regex tools use.

- I have not been successful in using the circumflex/“not” (^) operator, the bracket expression (“[]”) operator, the “zero or more” (*) operator, or the “one or more” (+) operator in regexes here.

As such, I had to tweak my snapshot names to have a common ending sequence. The path of least resistance here was to make them all end in “ly”, such as “frequently”, “daily” etc. I also had to spell out every possible snapshot name in the regex.
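The constraints observed above can be written down as a small sanity check. This is only a sketch of the rules as listed in this post, not of Samba's own validation; the function name check_shadow_settings is hypothetical and exists only for illustration.

```python
def check_shadow_settings(delimiter, fmt):
    """Return a list of problems, based only on the observations in this post."""
    problems = []
    if not delimiter:
        # Observation: the delimiter cannot be unset or blank.
        problems.append("shadow:delimiter cannot be blank")
    if delimiter.startswith("-"):
        # Observation: a leading literal "-" is not accepted.
        problems.append("shadow:delimiter cannot start with '-'")
    if not fmt.startswith(delimiter):
        # Observation: the format has to begin with the delimiter.
        problems.append("shadow:format must begin with shadow:delimiter")
    return problems


if __name__ == "__main__":
    # A delimiter ending the common "ly" suffix, followed by the timestamp format.
    print(check_shadow_settings("ly-", "ly-%Y-%m-%d-%Hh%MU"))
    # A delimiter that starts with "-" is flagged.
    print(check_shadow_settings("-", "-%Y-%m-%d-%Hh%MU"))
```

Running a candidate delimiter and format through a check like this before restarting Samba may save a few rounds of trial and error.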

Working smb.conf

I use “sysutils/zfstools” to create and manage snapshots on my FreeBSD file server, and I have configured it to store snapshots with date/time in UTC. As such, all snapshots are named in the pattern “zfs-auto-snap_(name_of_job)-%Y-%m-%d-%Hh%MU”. As an example, the “daily” snapshot created at 00:07 UTC on 28th December, 2020 is named “zfs-auto-snap_daily-2020-12-28-00h07U”.
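To make the naming pattern concrete, here is a minimal Python sketch that builds a snapshot name from a job name and a timestamp. It only mirrors the pattern described above; it is not how zfstools generates names, and the helper snapshot_name is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def snapshot_name(job, when):
    """Build a name in the pattern zfs-auto-snap_(name_of_job)-%Y-%m-%d-%Hh%MU.

    `when` must be a timezone-aware datetime; it is converted to UTC first.
    """
    utc = when.astimezone(timezone.utc)
    return "zfs-auto-snap_" + job + "-" + utc.strftime("%Y-%m-%d-%Hh%MU")


if __name__ == "__main__":
    # The "daily" snapshot from the example above.
    print(snapshot_name("daily", datetime(2020, 12, 28, 0, 7, tzinfo=timezone.utc)))
    # The same instant expressed in UTC+01:00 yields the same name.
    cet = timezone(timedelta(hours=1))
    print(snapshot_name("daily", datetime(2020, 12, 28, 1, 7, tzinfo=cet)))
```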

[global]

# Previous History stuff

vfs objects = shadow_copy2

shadow:snapdir = .zfs/snapshot

shadow:localtime = false

shadow:snapprefix = ^zfs-auto-snap_\(frequent\)\{0,1\}\(hour\)\{0,1\}\(dai\)\{0,1\}\(week\)\{0,1\}\(month\)\{0,1\}$

# shadow:format must begin with the value of shadow:delimiter,

# and shadow:delimiter cannot start with -

shadow:delimiter = ly-

shadow:format = ly-%Y-%m-%d-%Hh%MU
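As a rough illustration of how these three settings fit together, the sketch below splits a snapshot name at the delimiter, matches the part before it against the prefix pattern, and parses the rest with the format string. This is my reading of the configuration, not Samba's actual implementation, and parse_snapshot is a hypothetical helper. The pattern is written in Python's re syntax, which differs from what the module accepts, so the grouping characters are not backslash-escaped here.

```python
import re
from datetime import datetime, timedelta, timezone

DELIMITER = "ly-"              # shadow:delimiter
FORMAT = "ly-%Y-%m-%d-%Hh%MU"  # shadow:format, begins with the delimiter
# Python-syntax equivalent of shadow:snapprefix (Python's re is not BRE).
PREFIX_RE = re.compile(
    r"^zfs-auto-snap_(frequent){0,1}(hour){0,1}(dai){0,1}(week){0,1}(month){0,1}$"
)


def parse_snapshot(name):
    """Return the snapshot time as an aware UTC datetime, or None if no match."""
    index = name.find(DELIMITER)
    if index < 0:
        return None
    # Everything before the delimiter is the prefix; the rest is the timestamp.
    prefix, stamp = name[:index], name[index:]
    if not PREFIX_RE.match(prefix):
        return None
    try:
        parsed = datetime.strptime(stamp, FORMAT)
    except ValueError:
        return None
    # shadow:localtime = false, so the timestamp is taken to be UTC.
    return parsed.replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    when = parse_snapshot("zfs-auto-snap_daily-2020-12-28-00h07U")
    print(when)
    # The same instant as seen from a client in UTC+01:00.
    print(when.astimezone(timezone(timedelta(hours=1))))
```

Splitting “zfs-auto-snap_daily-…” at “ly-” leaves “zfs-auto-snap_dai” as the prefix, which is why the pattern lists “dai”, “hour”, “week” and so on rather than the full job names.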

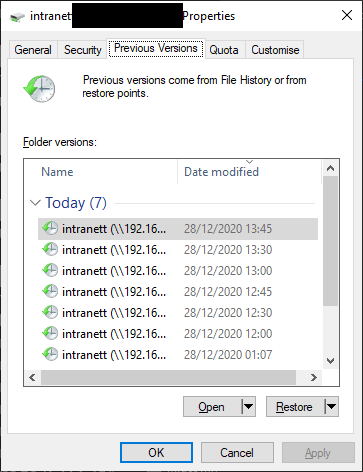

Example Result

Example output, with a file server in UTC and client in UTC+0100:

Updates to this blog post

- 2020-12-29

- Removed incorrect “+” in vfs objects directive.

- Moved a paragraph from the introduction to the smb.conf section.