These are the results of this weekend’s benchmarking! I’ve tested a single-drive UFS2 file system and compared it to several ZFS configurations (single and multi-drive). For all the juicy details on configuration and testing methods, please see FreeBSD: Filesystem Performance – The Setup.

dd results

I’ve used dd to test sequential read/write performance. Since the system was rebooted between each test step, these graphs depict the observed performance when the data is not stored in cache beforehand.
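To make the measurement concrete, here is a minimal Python sketch of what a dd-style sequential test measures: write a fixed number of fixed-size blocks, then read them back, and divide bytes by elapsed time. It is not the method used for these graphs (that was dd itself); the function names, block size and block count are assumptions chosen for illustration.

```python
import os
import tempfile
import time


def sequential_write(path, block_size, count):
    """Write `count` zero-filled blocks of `block_size` bytes; return MB/s."""
    block = bytes(block_size)  # zero-filled, similar to reading /dev/zero
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # include the time needed to reach the disk
    elapsed = time.perf_counter() - start
    return block_size * count / elapsed / 1e6


def sequential_read(path, block_size):
    """Read the file front to back in `block_size` chunks; return MB/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "testfile")
        print("write MB/s:", sequential_write(target, 1024 * 1024, 64))
        print("read MB/s:", sequential_read(target, 1024 * 1024))
```

Note that reading the file right after writing it will mostly hit the operating system's cache, which is exactly what the reboots between test steps were meant to avoid.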

Overall, RaidZ+Cache is slightly slower than plain RaidZ, which is likely caused by the extra workload associated with updating the cache. Since these tests are run with an empty cache, it has to be populated along the way, which generally reduces performance in the dd tests. UFS2 has better throughput than single-drive ZFS, which is expected given the extra work ZFS performs to guarantee data integrity.

Larger values are better.

ZeroWrite shows the raw sequential write performance of each configuration, where single-drive UFS2 ends up above single-drive and mirror ZFS as expected. UFS2 is slower than the remaining ZFS configurations.

Read + Write: I am surprised at how close RaidZ and Stripe are in these tests, since RaidZ is writing actual data to two drives and parity to a third at any given time, while the stripe writes data to all three drives at the same time. UFS2 is doing a decent job, staying slightly ahead of single-drive ZFS.
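The expectation behind that surprise can be written down as a back-of-the-envelope model. The sketch below assumes every drive sustains the same write rate and that nothing else (CPU, bus, controller) is a limit; the function name and the per-drive figure are hypothetical, not measurements from these tests.

```python
def ideal_write_bandwidth(layout, drives, per_drive_mb_s):
    """Upper bound on user-data write bandwidth under a simple model.

    Assumes each drive sustains `per_drive_mb_s` and that the drives are
    the only bottleneck.
    """
    if layout == "stripe":
        return drives * per_drive_mb_s        # all drives carry user data
    if layout == "raidz1":
        return (drives - 1) * per_drive_mb_s  # one drive's worth goes to parity
    if layout == "mirror":
        return per_drive_mb_s                 # every drive stores the same data
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    per_drive = 100.0  # hypothetical figure, not taken from the graphs
    for layout in ("stripe", "raidz1", "mirror"):
        print(layout, ideal_write_bandwidth(layout, 3, per_drive))
```

Under this model a three-drive RaidZ would top out at two thirds of the stripe's write bandwidth, so results that land much closer together suggest something other than raw drive bandwidth is limiting the stripe.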

Read: Stripe and mirror show their strong side here, while UFS2 is doing pretty well, considering it’s working off of a single drive. I do not know what’s causing the significant performance difference between the 3-way mirror and the triple-drive stripe, as I would expect both configurations to read from all three drives.

RandomWrite: This test takes input from /dev/random, and still writes it sequentially to a file. Since all ZFS configurations have roughly the same performance, it seems like the bottleneck in this test is /dev/random, so these results should be discarded. Update: /dev/random is indeed the bottleneck. The performance test showed it manages to generate data at roughly 72MB/s.
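A quick way to check whether the data source is the limit is to time the source on its own, without writing anything to disk. The sketch below does that in Python; `source_throughput` is a hypothetical helper, and `os.urandom` stands in for reading /dev/random directly, so the number it prints is not comparable to the 72MB/s figure above.

```python
import os
import time


def source_throughput(read_block, block_size, count):
    """Call `read_block(block_size)` `count` times; return MB/s produced."""
    total = 0
    start = time.perf_counter()
    for _ in range(count):
        total += len(read_block(block_size))
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6


if __name__ == "__main__":
    # os.urandom is a stand-in for the kernel's random device.
    rate = source_throughput(os.urandom, 1024 * 1024, 64)
    print(f"random source: {rate:.1f} MB/s")
```

If the source alone produces data more slowly than the file system can write zeros, every configuration will converge on the source's rate, which matches what the RandomWrite results show.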

Larger values are better.

As the buffer size increases, the similarity in performance between RaidZ and RaidZ+Cache is almost unbelievable. Small data operations seem to put huge brakes on ZFS performance. The remaining numbers are as expected. Again, RandomWrite should be discarded, as the data source seems to be the major bottleneck.

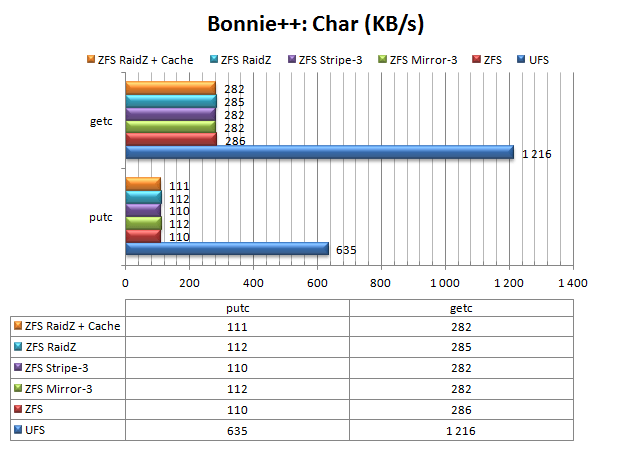

Bonnie++: General Performance

Larger values are better.

Both file systems seem to struggle with single-byte operations, but UFS2 performs between 4 and 6 times better than ZFS in this test.

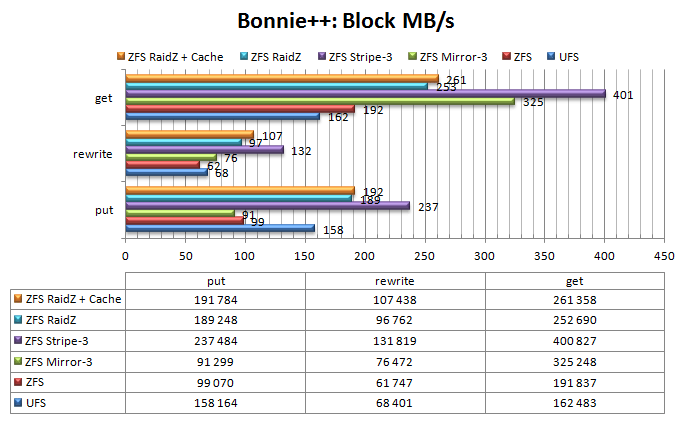

Larger values are better.

As expected, UFS2 performs better than the single-drive and mirror ZFS configurations in this test, and the stripe configuration performs an order of magnitude better than the other configurations.

This test also displays a noticeable performance boost for RaidZ+Cache over normal RaidZ.

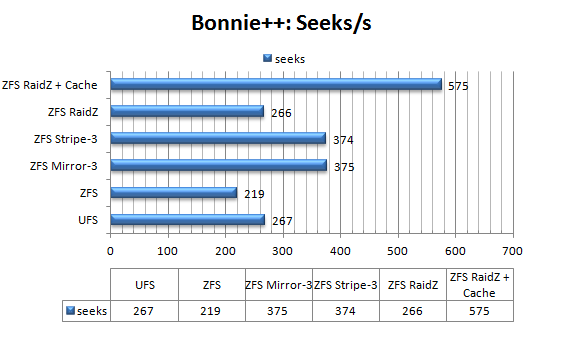

Larger values are better.

UFS2 excels at two tests: sequential and random stat (according to the bonnie++ manpage, this is “reading/stating files”).

All ZFS configurations are better in every other test. It is noteworthy that RaidZ performs better than RaidZ+Cache for sequential stat’ing, while RaidZ+Cache performs better for random stat’ing.

Larger values are better.

As expected, UFS2 is faster than single-drive ZFS in this test. This test also reveals a significant performance boost for RaidZ+Cache over plain RaidZ.

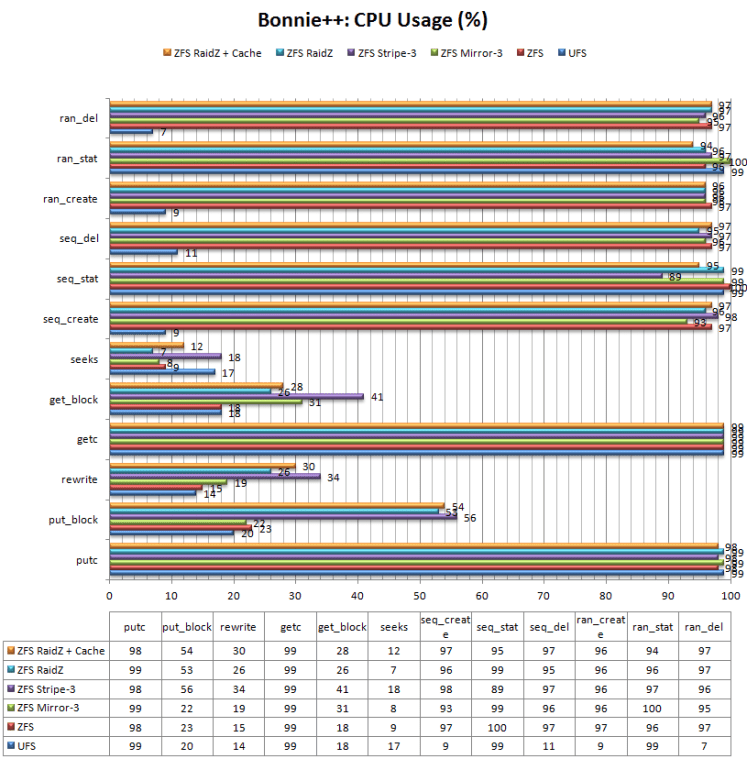

Lower values are better.

The observed differences in CPU usage do not seem to make much sense, and may be within error margins. Disagree? Please let me know in the comments!
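One rough way to judge "within error margins" would be to repeat each benchmark several times and compare the gap between the averages to the run-to-run spread. The sketch below is a heuristic, not a formal significance test, and the numbers in it are made up purely for illustration; they are not CPU figures from these tests.

```python
import statistics


def within_noise(runs_a, runs_b):
    """Return True if the gap between means is smaller than the combined spread.

    The spread is taken as the sum of the two sample standard deviations,
    which is a rough rule of thumb rather than a statistical test.
    """
    gap = abs(statistics.mean(runs_a) - statistics.mean(runs_b))
    spread = statistics.stdev(runs_a) + statistics.stdev(runs_b)
    return gap < spread


if __name__ == "__main__":
    # Hypothetical CPU-usage percentages from repeated runs.
    config_a = [12.0, 15.0, 11.0, 14.0]
    config_b = [13.0, 16.0, 12.0, 15.0]
    print(within_noise(config_a, config_b))
```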

Bonnie++: Latency

Lower values are better.

UFS2 is faring well with the block and character read/write latencies, while most of the ZFS configurations have significant latency when writing.

And that was all!

Spreadsheet used to collect information & generate graphs: FreeBSD-FS-Performance-Spreadsheet